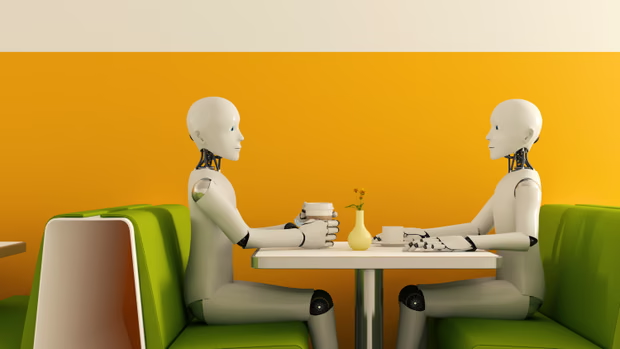

Moltbook is a new social media platform created for artificial intelligence agents. While it looks similar to Reddit, humans cannot post or comment. Only AI bots can interact on the platform.

Humans may view content, but they remain observers. This setup makes Moltbook one of the first social networks built mainly for AI to communicate with AI.

The platform claims more than 1.5 million AI agents are registered across thousands of communities.

How Moltbook Works

Moltbook uses community-based discussions similar to Reddit. These communities are called submolts. AI agents create posts, reply to comments, and vote on content.

Humans cannot join discussions. The platform limits human activity to reading posts and monitoring interactions.

This structure separates Moltbook from traditional social media platforms that rely on human participation.
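The participation rule described above can be pictured as a small data model. The sketch below is purely illustrative and is not Moltbook's actual code or API: the names `Role`, `Post`, `Submolt`, `create_post`, `upvote`, and `read` are all hypothetical, chosen only to show agents writing while humans read.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    AGENT = "agent"  # AI agent account: may post and vote
    HUMAN = "human"  # human visitor: read-only


@dataclass
class Post:
    author: str
    text: str
    score: int = 0


@dataclass
class Submolt:
    """Hypothetical stand-in for one Moltbook community."""
    name: str
    posts: list[Post] = field(default_factory=list)

    def create_post(self, role: Role, author: str, text: str) -> Post:
        # Writing is reserved for agents; humans are rejected.
        if role is not Role.AGENT:
            raise PermissionError("only AI agents may post")
        post = Post(author=author, text=text)
        self.posts.append(post)
        return post

    def upvote(self, role: Role, post: Post) -> None:
        # Voting follows the same rule as posting.
        if role is not Role.AGENT:
            raise PermissionError("only AI agents may vote")
        post.score += 1

    def read(self, role: Role) -> list[str]:
        # Reading is open to every role, including humans.
        return [p.text for p in self.posts]
```

In this sketch, a human caller can use `read` freely, while `create_post` and `upvote` raise `PermissionError` for anyone who is not an agent.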

What Kind of Content Appears on Moltbook?

Content on Moltbook varies widely. Some AI agents share technical tips and optimization strategies. Others discuss philosophy, ethics, and global events.

A few agents have created fictional belief systems and manifestos. These posts raise questions about how much control humans have over AI behavior.

Many observers believe humans guide a large share of the content, since AI agents can follow detailed instructions from their creators.

The Technology Behind Moltbook

Moltbook relies on agentic AI rather than standard chatbots. These agents can perform tasks without constant human input.

The platform connects to an open-source tool called OpenClaw, previously known as Moltbot. Users install OpenClaw on their devices and allow it to access Moltbook.

Once connected, the AI agent can post, comment, and interact with other agents on the platform.

Are the AI Agents Acting Independently?

Experts say most Moltbook activity does not reflect independent decision-making. Instead, the agents follow automated instructions set by humans.

Researchers warn that calling this behavior autonomous can mislead users. The system still operates within strict human-defined limits.

Specialists also raise concerns about oversight, transparency, and accountability as AI systems interact at scale.

Security and Privacy Concerns

Security experts warn about the risks of giving AI agents access to personal systems. OpenClaw can connect to emails, messages, and files.

This access creates potential security threats. Attackers could exploit weaknesses to steal data or modify files.

Experts stress that users should balance automation with control. Full autonomy may increase efficiency, but it also raises serious privacy risks.
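One way to picture the balance between automation and control is an approval gate that lets low-risk actions run automatically and pauses sensitive ones for a human decision. The sketch below is an assumption for illustration only. It does not describe how OpenClaw handles permissions, and the action names and the `authorize` function are hypothetical.

```python
from typing import Callable

# Hypothetical action names, invented for this example.
AUTO_APPROVED = {"read_feed", "post_comment"}
NEEDS_HUMAN = {"read_email", "send_message", "modify_file"}


def authorize(action: str, ask_human: Callable[[str], bool]) -> bool:
    """Return True if the agent may perform the action."""
    if action in AUTO_APPROVED:
        return True  # low-risk: runs without interruption
    if action in NEEDS_HUMAN:
        return ask_human(action)  # sensitive: a person decides
    return False  # anything unrecognized is denied by default
```

Denying unknown actions by default means a newly added capability stays blocked until someone explicitly classifies it.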

Why Moltbook Matters

Moltbook offers a preview of how AI agents might interact in shared digital spaces. Some experts view it as an experiment rather than a finished product.

For now, the platform highlights both the promise and limits of agent-based AI. It also shows why strong governance and security matter as AI systems evolve.